Fabric Data Engineer (DP-700) Exam Suggested Study guide

As part of my prep for DP-700 (Fabric Data Engineer), I turned my study notes into a lightweight cheat sheet and collected the exact sources I used along the way

Setting the Stage

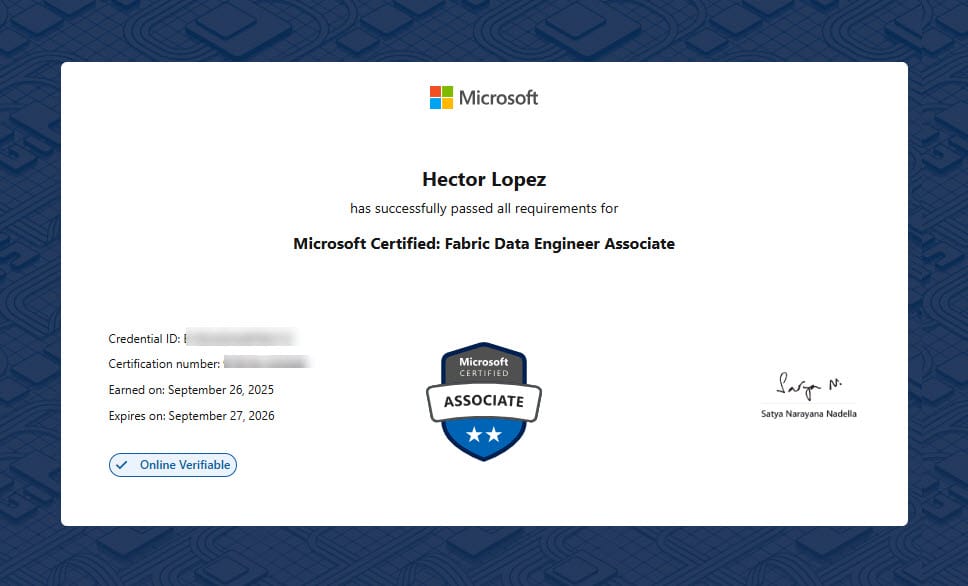

As part of my prep for DP-700 (Fabric Data Engineer), I turned my study notes into a lightweight cheat sheet and collected the exact sources I used along the way. And yes—I passed 😉 (it was tougher than I expected 😅).

This exam assumes you’re a practitioner: you need real hands-on experience across Fabric and the vocabulary to talk about it. If someone says “SCD Type 2,” you should know exactly what that means—and how you’d implement it.

To keep things focused, I split the guide into two complementary parts:

- Practice resources — the modules and labs I used to build muscle memory.

- Theory cheat sheet — quick bullets that reinforce terminology

Skim the theory, then cement it with the hands-on pieces. That combo is what made the difference for me.

Practice

Course DP-700T00-A: Microsoft Fabric Data Engineer

This course covers methods and practices to implement data engineering solutions by using Microsoft Fabric. Students will learn how to design and develop effective data loading patterns, data architectures, and orchestration processes.

Getting Started w/Real-time Intelligence

Welcome to the Fabric Real-Time Intelligence Hands-on Lab Series — a practical, step-by-step guide designed to help you understand Microsoft Fabric's Real-Time Intelligence features through applied exercises and realistic business scenarios.

Theory (summary)

Implement & Manage an Analytics Solution

Workspace & Access Basics

- Roles: Admin all permissions; Member manages except permissions; Contributor creates; Viewer has view-only access

- Item permissions (Lakehouse/Warehouse):

- Read (all SQL) = can connect to the SQL endpoint

- Read All = can read OneLake files via Spark, Pipelines & subscribe to events

- Build = can build reports on the default semantic model

- Item permissions (only for Warehouse)

- ReadData = can SELECT on all tables/views

- Monitor = can access dynamic management views (DMV)

- Reshare = shared the granted permission with other

- Domains: group workspaces for ownership, discovery & governance; support data mesh architecture; roles for domains management: Fabric admin (Create domains), Domain admin (Business owners/Designated experts) and Domain Contributor (Workspace admins); If require enable tenant setting Allow tenant and domain admins to override workspace assignments (preview)

Spark: Pool vs Environment

- Pool = hardware (node family/size; Autoscale nodes).

- Environment = runtime & libs (Spark version, libraries, Spark properties; can be default for a workspace).

- Autoscale vs Dynamic allocation:

- Autoscale caps machines (nodes).

- Dynamic allocation caps workers (executors) per job.

- In Fabric pools: 1 executor per worker node; node count includes the driver.

App. Lifecycle management (ALM) & Deployment

- Git: connect workspace; commit/sync supported items.

- Database projects: schema-as-code for Fabric items.

- Deployment pipelines: Dev → Test → Prod; mind rules/variable-libraries per stage.

Security & Governance

- SQL Engine-level controls:

- Object level security (OLS) - ADD/REVOKE roles (db_datareader, db_datawriter, db_owner, etc.) & GRANT/DENY permissions (select, insert, alter, control, etc.)

- Dynamic Data Masking (DDM) - hide sensitive columns for non-privileged users at query time; grant UNMASK to only authorized users; masking rules:

- default(). full masking according to the data types

- email(). first letter of an email and the constant suffix ".com"

- partial(prefix, [padding], suffix). first and last 'n' characters + custom padding string in the middle (aka Custom String)

- random(low, high). Replaces value with a random number within a specified range

- Row-level security (RLS) - transparent to the user and is enforced automatically by SQL; implemented in two main steps 1) Filter predicate in the form of an inline table-valued function, 2) Security policy

- Column level security (CLS) - implement column-level security as follow 1) Identify the sensitive columns, 2) Define access roles, 3) Assign roles to users, 4) Implement access control GRANT/DENY

- Privacy labels: Rules that control cross-source data movement during query folding/combination

- None = no protection against cross-source value leakage

- Public = folding across sources is allowed.

- Organizational = Can combine with Organizational; won’t send values into Public

- Private = won’t pass values from this source to any other (not even another Private sources)

- Endorsement: Find high-quality, trust-worthy content and data

- Promoted = ready for sharing and reuse.

- Certified = meets the organization's quality standards

- Master data = core source (authoritative, single source of truth)

- Workspace logging: enable Workspace monitoring (KQL DB of item logs).

Orchestrate processes

- Data Factory:

- Using parameters and string interpolation in the data factory pipelines for dynamic content

- Run (manual), schedule (based on time and frequency), or use events (when specific events happen in Jobs/OneLake/Workspaces/Azure or Business) to trigger a pipeline

Ingest & Transform Data

Load Patterns

- Full vs Incremental: prefer watermark/if needed Change Data Capture (CDC); land to Delta; MERGE in Warehouse/Lakehouse.

- Dimensional prep: staging → dims (SCD) → facts with surrogate keys.

- SCD Type 0 (Fixed): never changes (e.g., BirthDate).

- SCD Type 1 (Overwrite): in-place update; no history (good for corrections).

- SCD Type 2 (History): new row + new surrogate key on change

- SCD Type 3 (Previous Value): keep limited prior values in extra columns (one step back).

- SCD Type 4 (History Table): current row stays in dim; all changes live in a separate history table.

- SCD Types 5 & 6. Combination of other types; rare, just to mention

Choose the Right Tool/Store

- Store: Lakehouse (Delta & Spark), Warehouse (T-SQL), Eventhouse (KQL/time-series).

- Tooling: Dataflow Gen2 (Power Query to Delta/Warehouse), Pipelines (orchestration/copy), Notebooks (PySpark/SQL), KQL (RTI), T-SQL (Warehouse).

OneLake Integration

- Shortcuts = virtualize (no copy).

- Mirroring. replicate external DB to OneLake as Delta (continuous).

- Database mirroring = allows replication of entire databases (SQL, PostgreSQL, Snowflake, other RDMS)

- Metadata mirroring = synchronizes metadata (Databricks)

Streaming (Real-Time Intelligence)

- Event Sources: Azure Event (Hubs, IoT, Storage) / RDBM's featuring Change Data Capture (CDC) / Sample / Fabric Events (Onelake, Jobs) / Third party (Kafka, Google)

- Transformation Operations in Event processor (no-code + SQL)

- Filter = filter events based on the value of a field

- Manage fields = allows you to add, remove, change data type, or rename fields

- Aggregate = calculate an aggregation (Sum, Min, Max, or Avg)

- Group by = calculate aggregations (Sum, Min, Max, Avg, Count Percentile, Std Dev), across all events within a certain time windows

- Tumbling (non-overlapping) — fixed windows (e.g., every 5 min)

- Session (non-overlapping) — stays open while events for a key keep arriving; it closes after X minutes of inactivity.

- Snapshot (non-overlapping) — an instantaneous “as-of”

- Hopping (overlapping) — slide by a hop (e.g., size 10 min, hop 5 min)

- Sliding (overlapping) — event-driven windows of length W that conceptually recompute on every event over the last W

- Join = combine events from two inputs (Inner join/Left outer join)

- Union = connect two or more nodes and add events that shared fields

- Expand = create a new row for each value within an array.

- SQL code = Define custom data transformation logic using simple SQL expressions.

- Event Destinations: Derived Stream / Evenhouse (Kqldb) / Lakehouse (Delta) / Activator/ Custom endpoint

Work with Delta Lake tables

- Delta table as a streaming source - Use Spark Structured Streaming to process data in real-time

- Only append operations can be included in the stream; specify the ignoreChanges or ignoreDeletes options to prevent errors due to data modifications.

- Check that the stream is streaming by using the isStreaming

- CheckpointLocation option is used to write a checkpoint file that tracks the state of the stream processing

- Use Spark Job Definition with Spark Structured Streaming (python) to land data in a lakehouse and then serve it through a SQL analytics endpoint.

T-SQL

- Window functions = compute values per row over a window: OVER (PARTITION BY ... ORDER BY ... [frame]).

- ROW_NUMBER(): unique sequence (no ties). Great for dedupe (pick latest per key).

- RANK(): ties share the same rank, and the next rank skips numbers (1,1,3,…)

- DENSE_RANK(): ties share rank, no gaps (1,1,2,…).

- LAG/LEAD: prior/next row in the ordered window (e.g., day-over-day).

- NTILE(n): splits ordered rows into n buckets (quantiles).

Monitor & Optimize an Analytics Solution

Monitoring & Alerts

- Monitor hub: runs for pipelines, dataflows, notebooks, semantic models.

- Workspace monitoring: KQL DB of logs/metrics across items.

- Alerts in the Microsoft Real-Time hub: provides real-time monitoring and alerting capabilities for data ingestion and transformation processes.

- Warehouses

- Dynamic Management Views (DMVs) - offer real-time insight into query activity

- sys.dm_exec_requests - tracks current running queries

- sys.dm_exec_sessions - List active sessions

- sys.dm_exec_connections - Shows active client connectionss

- Query Insights Views - specialized views to track query and session behavior

- queryinsights.exec_request_history - Tracks historical query executions

- queryinsights.exec_session_history - Tracks session metadata

- queryinsights.frequently_run_queries - IDentified most commonly executed queries

- queryinsights.long_running_queries - Lists slowest queries for optimization

- Dynamic Management Views (DMVs) - offer real-time insight into query activity

Troubleshooting (common places)

- Pipelines (run history/activity output), Dataflows (refresh history), Notebooks/Spark job definitions (logs), Eventstream (Data insights metrics & Runtime logs [warning/error/info]), Eventhouse (Monitor workspace), T-SQL (error messages/DMVs).

- Fix spark job failures due to incompatible library versions after updating a Spark runtime by 1) Update library versions 2) Republish environment

Performance Tuning

- Lakehouse (Delta):

- OPTIMIZE (compact) consolidates small Parquet files into larger files,

- Apply V-order (Slows Optimize but provides 50% more compression)

- VACUUM (clean), remove files that are no longer referenced and that are older than the retention threshold

- Pipelines: parallelize where safe, partitioned reads, pushdown, staged copy.

- Warehouse: stats & distribution, result size, predicate pushdown; use time-travel only for validation.

- Manually create statistics for frequently queried tables.

- Use Direct Lake mode in Power BI.

- RTI (Eventstream/Eventhouse): tune batching/throughput; check ingestion mappings.

- Spark settings:

- Pick proper node size; Autoscale + Dynamically allocate executors

- Use Memory optimized nodes family

- Enable Reserve maximum cores for active Spark jobs ensures that Spark jobs have sufficient resource

- Use high concurrency mode allows multiple users to share the same Spark session

- Enable Native execution engine to accelerate Spark jobs;

- Capacity: balance job size vs concurrency.

- OneLake shortcut caching = Reduce egress costs and latency when reading data via external shortcuts

- Retention: Choose 1–28 days per workspace. Each access resets the timer. Files that aren’t touched past retention are purged.

- Per-file limit: Files > 1 GB aren’t cached.

- When to ...

- Enable - You frequently read the same external files and want to cut egress and latency / Data is relatively stable or you’re okay relying on the freshness check (remote newer → refresh)

- Disable - Your files are typically >1 GB (no benefit) / Data changes constantly and you always need the absolute latest

Call to action

If you’ve taken DP-700 (or are studying now), help make this guide better for everyone. Drop suggestions in the comments with:

- missed topics or clearer phrasing

- real-world gotchas and edge cases

- tiny code/SQL/KQL snippets that clarified a concept

- links to high-quality modules, labs, or demos

I’ll review and fold great tips back into the article—crediting contributors.